Automating tests with Vercel and GitHub Actions

I want the services I'm building to work for everyone, all the time.

Some context

Hosting sites on Vercel gives me a preview URL every time I create a pull request. I love that feature - it means I can manually inspect the real-world effects of my changes before merging them into the main branch and the live site.

Testing is boring though, and I don't trust myself to run through a long checklist every time I tweak a component just in case it breaks something important elsewhere. Running through those tests should be a job for machines, not me.

Instead of running those checks myself, I've used Vercel previews and GitHub Actions to automate the accessibility and end-to-end testing for theblackhart.co.uk.

Accessibility tests

Pa11y

Pa11y is a command-line tool which runs a suite of automated accessibility checks against a URL and reports each issue it finds, with a description of the problem and the element responsible. Its companion tool, pa11y-ci, can test a whole sitemap at once using the --sitemap flag, failing if any of the pages don't meet the accessibility baseline.
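To make that pass/fail behaviour a little more concrete, here's a rough sketch of the rule. Each issue pa11y reports has a `type` of `error`, `warning` or `notice`, and by default a page fails if any errors are reported for it. The `summariseResults` function, its `allowedErrors` parameter and the shape of its input are all hypothetical; this illustrates the rule rather than reproducing pa11y-ci's own code.

```javascript
// Hypothetical input: an object mapping each tested URL to a list of
// pa11y-style issues, e.g. { type: 'error', code: '...', message: '...' }.
function summariseResults(resultsByUrl, allowedErrors = 0) {
  const failures = []
  for (const [url, issues] of Object.entries(resultsByUrl)) {
    // Only errors count towards failure; warnings and notices are informational.
    const errorCount = issues.filter((issue) => issue.type === 'error').length
    if (errorCount > allowedErrors) {
      failures.push({ url, errorCount })
    }
  }
  // The whole run passes only if no page failed.
  return { passed: failures.length === 0, failures }
}
```

A single failing page is enough to fail the whole run, which is what makes the result usable as a CI gate.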

Building a sitemap to test against

Writing a static sitemap with a few URLs would be relatively simple, but because we're adding new content all the time, we'd like our sitemap to know about every page without the need to manually update it.

To generate that sitemap automatically, I've adapted a code snippet from Lee Robinson's blog. This version includes any pages or blog posts which might be dynamically generated by content in Prismic, using the same logic as the pages themselves.

The generate-sitemap script runs every time the site is built, and saves the resulting XML in the /public directory alongside a static robots.txt file.
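The adapted script itself isn't reproduced here, but its core can be sketched as follows: given a base URL and a list of site-relative paths (in the real script, collected from the site's pages and from Prismic content), build the sitemap XML and write it into the public directory. The function names `buildSitemap` and `writeSitemap` are hypothetical, and fetching content from Prismic is left out.

```javascript
const fs = require('fs')
const path = require('path')

// Escape characters that aren't allowed raw inside XML text nodes.
function escapeXml(value) {
  return value
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&apos;')
}

// Build a sitemap document from a base URL and a list of paths like '/about'.
function buildSitemap(baseUrl, paths) {
  const entries = paths
    .map((p) => `  <url><loc>${escapeXml(new URL(p, baseUrl).href)}</loc></url>`)
    .join('\n')
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    entries,
    '</urlset>',
  ].join('\n')
}

// Write the sitemap into the public directory so it's served at /sitemap.xml.
function writeSitemap(xml, publicDir = path.join(process.cwd(), 'public')) {
  fs.mkdirSync(publicDir, { recursive: true })
  fs.writeFileSync(path.join(publicDir, 'sitemap.xml'), xml)
}
```

Called from a build step as something like `writeSitemap(buildSitemap(baseUrl, paths))`, the file is regenerated on every build, so new pages appear without any manual edits.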

An environment-aware sitemap

It's not just the list of paths which can vary though. Our build could be taking place in all sorts of environments (local development, previews for a PR, or production), and the URL we test against should reflect that. If we have a new version of the site running at localhost:3000, we shouldn't point our tests to a sitemap where URLs start with theblackhart.co.uk.

Taking advantage of some more Vercel magic, we can set the base URL in the script according to the VERCEL_ENV environment variable, which Vercel sets to production, preview or development:

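```javascript
// VERCEL_URL is a bare hostname (no https://) inside Vercel builds,
// so add the protocol unless the value already includes one.
const previewUrl = process.env.VERCEL_URL || ''
const previewBase = /^https?:\/\//.test(previewUrl) ? previewUrl : `https://${previewUrl}`

const baseUrl = {
  production: 'https://theblackhart.co.uk',
  preview: previewBase,
  development: 'http://localhost:3000',
  // Fall back to development when VERCEL_ENV isn't set (e.g. running locally without the Vercel CLI).
}[process.env.VERCEL_ENV || 'development']
```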

Great! We now have relevant sitemaps associated with every version of the site, listing the most up-to-date set of pages.

Pointing pa11y-ci at the sitemap of a preview deployment will now give us a full description of any accessibility failures across that complete version of the site.
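With the --sitemap flag, pa11y-ci reads the sitemap and tests each URL listed in its `<loc>` elements. The sketch below shows that extraction step on its own, which can be handy for checking that a preview's sitemap really points at the preview and not at production. `extractSitemapUrls` is a hypothetical helper built on a simple regular expression, not pa11y-ci's implementation; a proper XML parser would be more robust.

```javascript
// Decode the five predefined XML entities. &amp; goes last so that
// an escaped "&amp;lt;" correctly becomes "&lt;" rather than "<".
function decodeXml(value) {
  return value
    .replace(/&lt;/g, '<')
    .replace(/&gt;/g, '>')
    .replace(/&quot;/g, '"')
    .replace(/&apos;/g, "'")
    .replace(/&amp;/g, '&')
}

// Return the URL inside each <loc>...</loc> element, in document order.
function extractSitemapUrls(xml) {
  const urls = []
  const locPattern = /<loc>\s*([^<]+?)\s*<\/loc>/g
  let match
  while ((match = locPattern.exec(xml)) !== null) {
    urls.push(decodeXml(match[1]))
  }
  return urls
}
```

For example, `extractSitemapUrls(xml).every((url) => url.startsWith(previewBaseUrl))` would confirm that every page in a preview's sitemap belongs to that preview (`previewBaseUrl` being whatever base URL you expect).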

As a bonus, generating a sitemap like this also makes it easier for search engines like Google to find every page on the site!

End-to-end testing

Accessibility tests are great, but they're not enough on their own. We also need to know whether the site actually operates the way we expect it to.

Component-based testing is the most common approach here, but testing components in isolation, rather than how they ultimately fit together, leaves room for all sorts of holes in functionality.

Instead, using a combination of Playwright and Jest, I can simulate real users' behaviour against a complete, working version of the site.

For example, the following snippet describes a user story made up of surprisingly readable actions and checks - we go to a specific page, click a button, and check that the resulting state is what we expected:

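```javascript
// `page` is a global provided by the Jest/Playwright setup; shopUrl and
// basketUrl are assumed to be defined elsewhere in the test setup (not shown).
describe('As a customer, I want to add products to my basket so that I can buy them', () => {
  test('Can add an item to the basket', async () => {
    await page.goto(`${shopUrl}/ring-sizer`)
    await page.click('text=add to basket')

    // page.url() is synchronous, so it doesn't need to be awaited
    expect(page.url()).toBe(basketUrl)

    await page.waitForSelector('[aria-label="basket items"] >> li', {
      state: 'attached',
    })

    // page.$$ returns every matching element; page.$ would return only the first
    const basketItems = await page.$$('[aria-label="basket items"] >> li')
    expect(basketItems.length).toBe(1)
  })
})
```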
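The test above relies on a global `page` object. One common way to get that with Jest is the `jest-playwright-preset` package, which launches a browser for each test file and exposes `browser`, `context` and `page` as globals. The exact setup behind this site isn't shown, so treat the configuration below as an assumption rather than the one actually in use.

```javascript
// jest.config.js
// Assumes jest, playwright and jest-playwright-preset are installed as dev dependencies.
module.exports = {
  // Provides the `browser`, `context` and `page` globals used in the tests.
  preset: 'jest-playwright-preset',
  // A generous per-test timeout, since each test drives a real browser
  // against a deployed copy of the site rather than an in-memory component.
  testTimeout: 30000,
}
```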

It's worth emphasising that the underlying components never appear in these tests, because ultimately, they're not important. The tests should verify that users can do what they need to do on the site, not that my code is clever.

Testing this way also allows the underlying implementation to change according to my needs as a developer, without having to endlessly rewrite tests; I could completely rewrite the site using the hottest new framework, but as long as my users can still add items to their basket, the tests should pass.

A long list of user stories can be run this way using a single command, returning a pass/fail status based on the complete suite. Using the same logic as for the sitemap generation, we can also specify which base URL the tests should run against, according to the environment where the command is being run. Local tests are run against a local URL, previews against their preview URL, and so on.
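One way to share that logic between the sitemap script and the test suite is a small module that both import. The sketch below assumes a file called `base-url.js` (a hypothetical name) and takes the environment as a parameter so it's easy to test in isolation. It also adds `https://` when `VERCEL_URL` arrives as a bare hostname, which is how Vercel provides it during builds, while leaving a full URL (such as one passed in by a CI workflow) untouched.

```javascript
// base-url.js (hypothetical module name)
const PRODUCTION_URL = 'https://theblackhart.co.uk'
const DEVELOPMENT_URL = 'http://localhost:3000'

// Resolve the base URL for the current environment.
// `env` defaults to process.env but can be passed in explicitly, e.g. in tests.
function getBaseUrl(env = process.env) {
  switch (env.VERCEL_ENV) {
    case 'production':
      return PRODUCTION_URL
    case 'preview': {
      const url = env.VERCEL_URL
      if (!url) throw new Error('VERCEL_URL must be set when VERCEL_ENV is preview')
      // Vercel provides a bare hostname; a CI workflow may pass a full URL instead.
      return /^https?:\/\//.test(url) ? url : `https://${url}`
    }
    default:
      // Anything else, including an unset VERCEL_ENV, is treated as local development.
      return DEVELOPMENT_URL
  }
}

module.exports = { getBaseUrl }
```

Both the sitemap script and the test setup can then call `getBaseUrl()` and build their URLs from the result, so the two can never disagree about which version of the site they're pointing at.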

Running the tests

These test suites alone have already sped up the development process. Running yarn pa11y or yarn test gives me an immediate sense of where there might be problems in the code I'm writing, and being able to point the tests at any version of the site feels very neat.

Automating tests with GitHub Actions

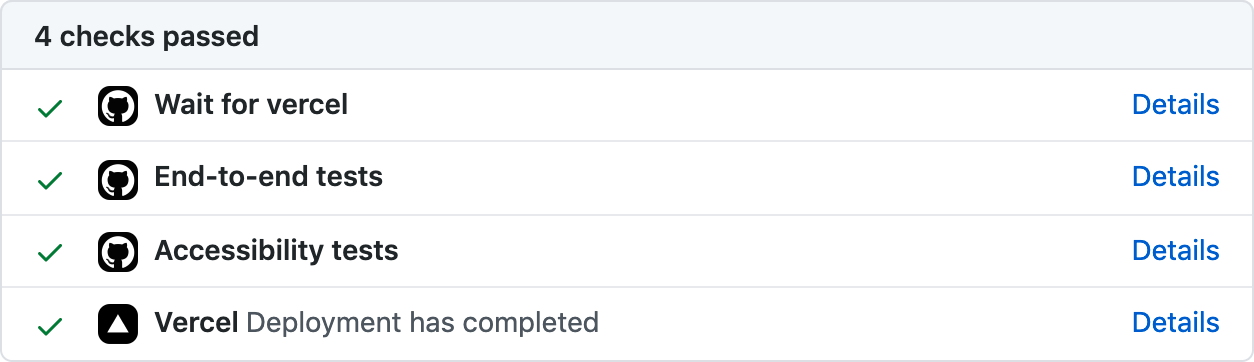

What I've described so far is nice, but it still relies on me remembering to run these tests before I deploy. By wrapping up the test commands in a GitHub Actions workflow which runs on every pull request against main, I can finally turn off that part of my brain and focus on the code.

The only remaining hurdle is the lag between a pull request being opened and the preview version of the site being published. Vercel needs some time to build the site, and until the preview is live, the tests can't run.

A community action, patrickedqvist/wait-for-vercel-preview, holds the tests back until the preview URL has been published. Once the preview is live, the action makes its URL available to the rest of the workflow, where it's picked up and used by each of the subsequent jobs.
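The idea of waiting for the preview can be sketched as a generic polling loop: keep checking until something reports that the deployment is ready, or give up after a timeout. This is not how the action is actually implemented; `waitFor`, its options and the `check` function are hypothetical, and just illustrate the retry-with-timeout pattern the workflow relies on.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms))

// Call `check` repeatedly until it returns a truthy value (e.g. a preview URL),
// or throw once `timeoutMs` has passed. `check` may be async.
async function waitFor(check, { timeoutMs = 120000, intervalMs = 5000 } = {}) {
  const deadline = Date.now() + timeoutMs
  while (Date.now() < deadline) {
    const result = await check()
    if (result) return result
    await sleep(intervalMs)
  }
  throw new Error(`Timed out after ${timeoutMs}ms`)
}
```

In practice `check` might request the preview URL and return it once the deployment responds successfully; the two-minute default here is an arbitrary choice.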

The entire workflow is laid out in the snippet below: first we wait for the preview URL from Vercel, then we run our end-to-end and accessibility tests in parallel.

```yaml
name: ci

on:
  pull_request:
    branches: [main]

jobs:
  wait_for_vercel:
    name: Wait for vercel
    runs-on: ubuntu-latest
    outputs:
      preview_url: ${{ steps.waitForVercelPreviewDeployment.outputs.url }}
    steps:
      - name: Wait for Vercel preview deployment to be ready
        uses: patrickedqvist/wait-for-vercel-preview@master
        id: waitForVercelPreviewDeployment
        with:
          token: ${{ secrets.GITHUB_TOKEN }}
          max_timeout: 120

  tests:
    name: End-to-end tests
    needs: wait_for_vercel
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v2
      - uses: actions/setup-node@v1
        with:
          node-version: 14.x
      - uses: microsoft/playwright-github-action@v1
      - run: npm install --include=dev
      - run: npm test
        env:
          VERCEL_URL: ${{ needs.wait_for_vercel.outputs.preview_url }}
          VERCEL_ENV: preview

  pa11y:
    name: Accessibility tests
    needs: wait_for_vercel
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v2
      - uses: actions/setup-node@v1
        with:
          node-version: 14.x
      - run: npm install -g pa11y-ci
      - run: pa11y-ci --sitemap ${{ needs.wait_for_vercel.outputs.preview_url }}/sitemap.xml
```

If everything passes, the PR can be merged with far less risk of breaking anything on the site - if not, we have a set of descriptive error messages which tell us what needs to be changed before the site can be deployed.

This workflow has been lovely in practice - it's caught plenty of tiny errors on the content and code sides of the build already.

The tests aren't a silver bullet by any means, but I sleep much more soundly knowing that the code I've written passes some baselines of accessibility and usability.

More than anything, offloading the responsibility for running the tests to a machine has allowed me to focus more on what the tests actually do, and how I can write code that really works for users.